Imagine if you could enter a play by putting on a virtual reality headset. Now imagine that the characters in the play are shaking your hand or giving you a hug, and that you can feel them do these things.

This is the kind of experience that researchers at Queen’s University are developing through the “Meta-Physical Theatre: Designing Physical Interactions in Virtual Reality Live Performances Using Robotics and Smart Textiles” project. In a nutshell, the project integrates physical touch into virtual reality (VR) live performances.

Dr. Matthew Pan is the lead researcher on the project. Dr. Pan is an Assistant Professor in the Faculty of Engineering and Applied Science and a member of Ingenuity Labs Research Institute at Queen’s. In 2024 he was one of six inaugural recipients of a Connected Minds seed grant, which supports community-focused research that pushes boundaries in technology and society.

Virtual Reality Beyond the Visual

In partnership with intersectional arts organizations, the project aims to build immersive narratives in which participants can not only see and hear virtual characters but also physically interact with them. It pushes VR beyond sight and sound, making touch part of the narrative structure.

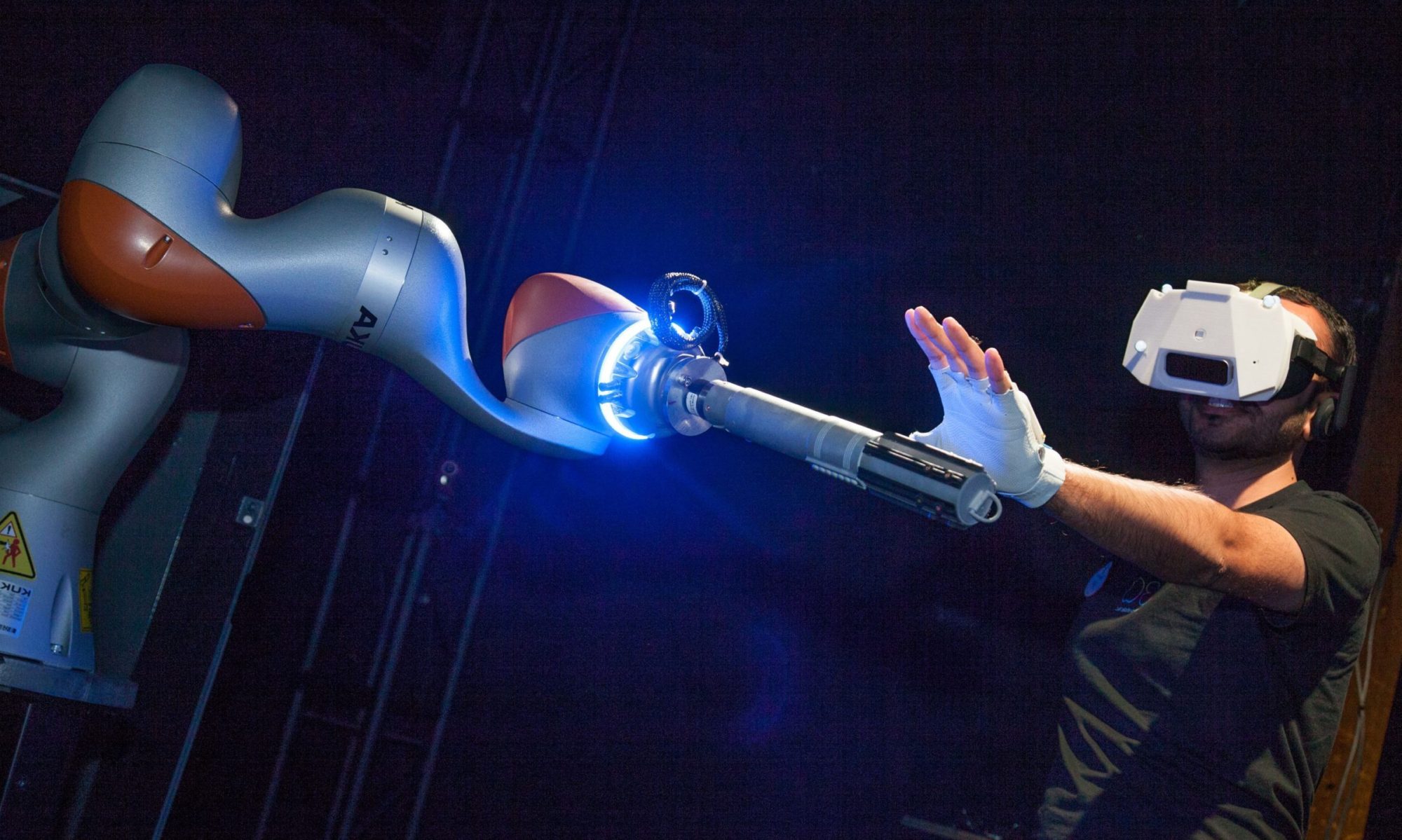

Dr. Pan’s idea took shape years earlier, during his time at Disney. While working on Star Wars: Galaxy’s Edge, he developed an immersive experience where visitors could feel an iconic “force grab” (when a Jedi summons a lightsabre through the air). “You would put on a VR headset, and you would see, in the distance, this lightsabre that you can reach out to with your hand and it would start zooming toward you,” he explains. “You would actually see the lightsabre come into your hand in VR. At the same time, a robot in the real world would deliver a lightsabre prop with the exact same timing and force.” Though Disney ultimately shelved the project, Dr. Pan didn’t give up on the idea. “I thought there was a lot left on the table by shelving that project.”

Making Touch Feel Real in VR

Of course, there’s no VR theatre without theatre, and Dr. Pan’s collaboration with Michael Wheeler is essential to the project. Wheeler is a fellow Ingenuity Labs and Connected Minds member, an Assistant Professor in the DAN School of Drama and Music, and Director of Artistic Research at SpiderWebShow Performance. “Shortly after arriving at Queen’s, I was introduced to Michael… we thought it would be really cool to actually have a theatrical narrative that uses interpersonal interactions in VR,” Dr. Pan says. Supported by community organizations, Dr. Pan and Wheeler co-created a live VR theatre experience that integrates physical touch. “It’s a high-risk, high-reward project that Connected Minds was willing to fund.

“[We are] creating this narrative that involves physical interactions with virtual characters. [We are] starting out simple: we’re looking at simple interactions like high fives, or fist bumps, and handovers of objects, where you don’t necessarily need a lot of fidelity in terms of physical interactions.”

To make these moments feel real, the team uses haptic proxies. As Dr. Pan explains, haptic proxies are physical props that “stand in for haptic interactions you would normally feel in the real world.” For example, a robot-mounted hand can simulate a high five at the exact moment the participant sees it in VR.

However, matching physical actions in the real world to virtual actions in VR is a major technical challenge. The system must align spatial coordinates using motion capture and high-fidelity 3D pose tracking, so that the proxy’s position in the real world matches where the headset shows the corresponding virtual object or character.
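
The article does not say how the team performs this alignment. As an illustrative sketch only, assuming the motion-capture system and the headset both treat the y axis as “up” (so the two coordinate frames differ only by a rotation about the vertical axis plus a translation), a handful of markers measured in both frames is enough for a least-squares fit. The function names below are hypothetical, not part of the project’s software.

```python
import math
from typing import List, Tuple

# (x, y, z) in metres. ASSUMPTION: y points up in BOTH tracking systems.
Point = Tuple[float, float, float]


def rotate_xz(x: float, z: float, theta: float) -> Tuple[float, float]:
    """Rotate a point in the horizontal (x, z) plane by theta radians about the y axis."""
    c, s = math.cos(theta), math.sin(theta)
    return x * c - z * s, x * s + z * c


def fit_yaw_translation(mocap: List[Point], vr: List[Point]) -> Tuple[float, Point]:
    """Least-squares yaw + translation mapping mocap-frame points onto VR-frame points.

    Hypothetical helper: mocap[i] and vr[i] are the same physical marker measured
    by the two systems. Needs at least two markers that are not vertically stacked.
    """
    n = len(mocap)
    cm = [sum(p[i] for p in mocap) / n for i in range(3)]  # mocap centroid
    cv = [sum(q[i] for q in vr) / n for i in range(3)]     # VR centroid
    dot = cross = 0.0
    for p, q in zip(mocap, vr):
        ax, az = p[0] - cm[0], p[2] - cm[2]  # centred horizontal coords, mocap frame
        bx, bz = q[0] - cv[0], q[2] - cv[2]  # centred horizontal coords, VR frame
        dot += ax * bx + az * bz
        cross += ax * bz - az * bx
    theta = math.atan2(cross, dot)  # yaw that best aligns the two point sets
    # Translation carries the rotated mocap centroid onto the VR centroid.
    rx, rz = rotate_xz(cm[0], cm[2], theta)
    return theta, (cv[0] - rx, cv[1] - cm[1], cv[2] - rz)


def to_vr(p: Point, theta: float, t: Point) -> Point:
    """Map a mocap-frame point (e.g. the robot's proxy hand) into the VR frame."""
    x, z = rotate_xz(p[0], p[2], theta)
    return x + t[0], p[1] + t[1], z + t[2]
```

Once calibrated, the proxy hand’s tracked position could be passed through `to_vr` every frame so the virtual hand is drawn where the physical one actually is. If the two systems disagreed about which way is up, a full 3D fit (for example the Kabsch algorithm) would be needed instead.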

Timing matters too: the team must synchronize physical and virtual actions on the scale of milliseconds. “For dynamic experiences, it’s even more complicated,” Dr. Pan explains. “Particularly for handovers or high fives, there needs to be not only a physical correlation, but also a temporal correlation. You can’t have the high five happen in VR first, followed by it happening 500 milliseconds later in the physical world. It breaks the illusion.” To avoid this lag, the team uses a system that shares information between the VR environment and the robotic devices, keeping latency low and synchronization precise.
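
The article does not describe how the team’s system keeps the two sides in step. One common approach, shown here as a hedged Python sketch rather than the team’s method, is to estimate the offset between the VR computer’s clock and the robot controller’s clock with an NTP-style request/reply exchange, then start the robot early enough that physical contact lands on the virtual event. The `ClockSample` fields, the motion lead time and the 20 ms default tolerance are hypothetical placeholders, not figures from the project.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ClockSample:
    """One request/reply exchange between the VR host and the robot controller (seconds)."""
    t0: float  # VR host sends request   (VR clock)
    t1: float  # robot receives request  (robot clock)
    t2: float  # robot sends reply       (robot clock)
    t3: float  # VR host receives reply  (VR clock)


def round_trip_delay(s: ClockSample) -> float:
    """Network time spent in transit, excluding the robot's processing time."""
    return (s.t3 - s.t0) - (s.t2 - s.t1)


def estimate_offset(samples: List[ClockSample]) -> float:
    """NTP-style estimate of (robot clock - VR clock).

    Uses the sample with the smallest round-trip delay, since it is the least
    distorted by network jitter. Assumes roughly symmetric one-way delays.
    """
    best = min(samples, key=round_trip_delay)
    return ((best.t1 - best.t0) + (best.t2 - best.t3)) / 2


def robot_start_time(vr_contact_time: float, offset: float, motion_lead: float) -> float:
    """Robot-clock time at which to start moving so contact coincides with the VR event.

    motion_lead is a hypothetical, pre-measured time the robot needs to reach
    the contact pose after it starts moving.
    """
    return vr_contact_time + offset - motion_lead


def within_tolerance(vr_contact: float, physical_contact: float, tolerance: float = 0.02) -> bool:
    """True if the measured physical contact (already on the VR clock) is close enough.

    The 20 ms default is a placeholder, not a threshold reported by the project.
    """
    return abs(physical_contact - vr_contact) <= tolerance
```

For example, with an estimated offset of 2.0 s and a 0.4 s motion lead, a high five scheduled at 15.0 s on the VR clock means commanding the robot at 16.6 s on its own clock.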

Collaboration Across Disciplines

The project is supported by two arts organizations: SpiderWebShow Performance and bCurrent Performing Arts. The former is a Kingston-based arts organization and Canada’s first live-to-digital performance company. It focuses on exploring the intersection of live performance and digital technology. “With SpiderWebShow, we work with Adrienne Wong, who is contributing to the dramaturging,” says Dr. Pan. bCurrent is a Toronto-based company that supports the work of Black and intersectional artists; it plays a role in shaping the narrative voice of the theatrical experience. Together, these collaborators ensure that the narrative experience is inclusive and culturally relevant.

For Dr. Pan, it’s important that the creative process between engineers and artists is authentic. “We are emphasizing the co-creative nature of this project … [Michael and I] talk about these experiences at length and we have many ideas on what we eventually want to do with this technology, but one of the most important steps is we’re not leaving each other out in the dark.”

Beyond Theatre: Next Steps

Dr. Pan has big ideas about where the project and technology could eventually go.

“We already have inquiries into sports training,” he says. “There’s lots of implications for being able to customize training regimens for athletes.” For instance, being able to train a hockey goalie in a safe and replicable environment without needing live opponents or expensive setups would be helpful to coaches.

The technology could also support hands-on training for skilled trades, with the potential to lower barriers to technical training and to improve safety. “We could use a robot to mimic a lathe, and then do operator training in VR. Especially when there is a shortage of machine equipment or safety concerns, we could have novices training with haptic proxies before moving on to the physical machine.”

Beyond performance and training, Dr. Pan is also excited about applying the technology to elder care and to combatting loneliness. He has been speaking with Dr. Lora Appel, a Connected Minds researcher at York University who studies VR in palliative and geriatric care. “Loneliness is a huge issue with the elderly population,” he explains. “[We are exploring] if we can use this technology to make social connections.”

Conclusion

In an age when screens mediate much of our social lives, Dr. Pan asks: what if we could reclaim a sense of touch, even through a headset? His project brings together engineering, art, performance and community, showing that immersive technology isn’t about tricking the eye; it’s about restoring presence and human connection.